In this article you will meet Docker, the popular container engine. Through this introduction, we will define its components, list its benefits, and discover what makes it so widely used today and such a key piece of the DevOps world.

For a start, you may wonder:

What is Docker?

Docker is one of the most popular tools for building, deploying, and running applications in containers. A container packages an app together with everything it needs (dependencies, libraries, config files…) and ships it as a single unit. As a result, the app behaves the same way on any machine that runs Docker.

Docker was released in 2013, and today it is used by many well-known companies such as Spotify, Netflix, PayPal, and Uber. You can check the whole list here.

Before moving on to the next section:

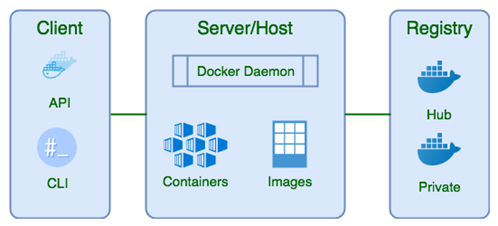

Let’s look at Docker’s architecture to understand it better. Since this is only an introduction, we will describe each component briefly:

- Docker Client: provides a command-line interface (CLI) that lets us send commands such as build, run, and stop to a Docker daemon.

- Docker Host: the machine on which the Docker daemon runs, and therefore where containers, the running instances of Docker images, actually execute. A Docker host can be a bare-metal server, a virtual machine, a cloud instance, etc.

- Docker Daemon: the background service (`dockerd`) that receives requests from the client and carries out all container-related work, such as building images and running, stopping, and managing containers.

- Docker Registries: repositories for Docker images. By pushing your images to a registry (Docker Hub or a private registry), you can share them with your team.

- Docker Objects:

    - Docker Image: an immutable, read-only package that contains the application's source code, libraries, and dependencies. As its name suggests, it is only a static snapshot of the containerized app: nothing runs until you start a container from it.

    - Docker Container: an instance of a Docker image, created when you run that image. It has a state (running, paused, exited…) and, unlike the image, it can be modified, because each container gets its own writable layer on top of the image.

    - Networks: the networks through which isolated containers communicate with each other and with the outside world. Docker mainly offers five network drivers:

        - Bridge

        - Host

        - Overlay

        - None

        - Macvlan

        We will cover each of them in upcoming courses.

    - Storage: manages the data used by running containers. If you want to persist your data, modify it without accessing the container, or change Docker’s default data behaviour, you will need to set up one of Docker’s storage options. Docker offers four options for that:

        - Data Volumes

        - Volume Container

        - Directory Mounts

        - Storage Plugins

        We will cover each of them in upcoming courses too.

Together, all the components above make up Docker’s architecture.
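To make the image/container distinction above more concrete, here is a toy Python model. It is not how Docker is implemented: `Image`, `Container`, and the `TRANSITIONS` table are hypothetical names, and the state table is only a simplified subset of what the `docker start`, `pause`, `unpause`, and `stop` commands do. The frozen dataclass plays the role of the immutable image, while each container keeps its own state and its own writable data.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Toy model only: these classes are hypothetical and do not mirror Docker's internals.


@dataclass(frozen=True)
class Image:
    """Immutable: assigning to an attribute after creation raises an error."""
    name: str
    contents: Tuple[str, ...]  # e.g. source code, libraries, dependencies


# Simplified subset of the state changes caused by docker start/pause/unpause/stop.
TRANSITIONS: Dict[str, Dict[str, str]] = {
    "created": {"start": "running"},
    "running": {"pause": "paused", "stop": "exited"},
    "paused": {"unpause": "running"},
    "exited": {"start": "running"},
}


@dataclass
class Container:
    """A stateful instance of an image, with its own writable data."""
    image: Image
    state: str = "created"
    writable_layer: Dict[str, str] = field(default_factory=dict)

    def apply(self, action: str) -> None:
        """Move to a new state, rejecting actions the current state does not allow."""
        allowed = TRANSITIONS[self.state]
        if action not in allowed:
            raise ValueError(f"cannot {action} a {self.state} container")
        self.state = allowed[action]

    def write(self, path: str, data: str) -> None:
        """Changes land in this container only; the image is never modified."""
        self.writable_layer[path] = data


if __name__ == "__main__":
    img = Image("myapp:1.0", ("app.py", "requirements.txt"))
    web = Container(img)
    web.apply("start")
    web.apply("pause")
    print(web.state)  # paused
    web.write("/tmp/cache", "data")
    print(Container(img).writable_layer)  # {}
```

Two containers created from the same image evolve independently: pausing or writing to one leaves the other, and the image itself, untouched.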

How does Docker work?

Docker builds images from what’s called a Dockerfile: a text document that contains all the commands a user could call on the command line to assemble an image. To build an image, Docker reads the instructions in the Dockerfile one after another, in order, until the final image is constructed and saved.
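The Python sketch below illustrates how a Dockerfile is processed in order, one instruction at a time, using a small made-up Dockerfile for a hypothetical Python app (`app.py` and `requirements.txt` are placeholders). The reader is deliberately naive: it assumes one instruction per line and skips comments, whereas Docker's real parser also handles line continuations, parser directives, multi-stage builds, and more.

```python
from dataclasses import dataclass
from typing import List, Tuple

# A made-up Dockerfile for a hypothetical Python app (app.py, requirements.txt).
DOCKERFILE = """\
# Start from an official Python base image
FROM python:3.12-slim
WORKDIR /app
COPY . .
RUN pip install -r requirements.txt
CMD ["python", "app.py"]
"""


@dataclass(frozen=True)
class Instruction:
    keyword: str  # e.g. FROM, COPY, RUN
    args: str     # everything after the keyword


def read_dockerfile(text: str) -> List[Instruction]:
    """Naive reader: one instruction per line, blank lines and comments skipped."""
    instructions = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, args = line.partition(" ")
        instructions.append(Instruction(keyword.upper(), args.strip()))
    return instructions


def build(text: str) -> Tuple[str, ...]:
    """Apply the instructions strictly in order; the result stands in for the image."""
    steps = read_dockerfile(text)
    applied: Tuple[str, ...] = ()
    for number, ins in enumerate(steps, start=1):
        print(f"Step {number}/{len(steps)}: {ins.keyword} {ins.args}")
        applied += (f"{ins.keyword} {ins.args}",)
    return applied


if __name__ == "__main__":
    build(DOCKERFILE)
```

The important point is the ordering: each step starts from the result of the previous one, which is why the order of instructions in a Dockerfile matters.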

In other words: once your Dockerfile is ready, you run the `docker build` command from the client CLI. The CLI sends the request to the Docker daemon, which executes the Dockerfile instructions sequentially until the image is built. Once the image is ready, you use the CLI again to run an instance of it, which gives you a container. You can also use the CLI's control commands to start, pause, or stop that container. And of course, you can push the image to a public or private Docker registry.
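Here is that workflow expressed as the sequence of Docker CLI commands it involves. This is a minimal Python sketch that only prints the commands by default (`dry_run=True`), so it runs even without Docker installed; the image name `myapp:1.0`, the container name `myapp`, and the registry address `registry.example.com/team/myapp:1.0` are placeholders you would replace with your own.

```python
import shlex
import subprocess
from typing import List

# Placeholder names: replace with your own image, container, and registry.
IMAGE = "myapp:1.0"
CONTAINER = "myapp"
REMOTE = "registry.example.com/team/myapp:1.0"


def workflow_commands(image: str, container: str, remote: str) -> List[List[str]]:
    """Docker CLI calls for: build -> run -> pause/unpause -> stop -> tag -> push."""
    return [
        ["docker", "build", "-t", image, "."],                # build context: current directory
        ["docker", "run", "-d", "--name", container, image],  # new container from the image
        ["docker", "pause", container],                       # freeze its processes
        ["docker", "unpause", container],                     # resume them
        ["docker", "stop", container],                        # stop the container
        ["docker", "tag", image, remote],                     # give the image a registry name
        ["docker", "push", remote],                           # upload it to that registry
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them one by one when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    run(workflow_commands(IMAGE, CONTAINER, REMOTE))
```

Passing `dry_run=False` would execute each command with `subprocess.run(..., check=True)` and stop at the first failure; that requires a running Docker daemon and, for `docker push`, being logged in to the registry with `docker login`. A stopped container can later be restarted with `docker start myapp`.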

These are only the basic operations. Docker has many other features, such as network and volume management, which we will keep for another article.

So, now you know what Docker is and how popular it is, but…

Why should you use Docker?

It would take pages to list all of Docker’s benefits. Since this is only an introduction to Docker, we will mention just the main ones; you can discover the others through your own experience!

- Docker enables more efficient use of system resources: containers share the host’s kernel instead of each running a full guest operating system, so they use fewer resources than virtual machines. They are lightweight and start and stop very quickly.

- Docker enables faster software delivery cycles: modern software must respond quickly to market needs, so it should be easy to update and extend. Docker containers make it simple to ship new software versions and push them to production quickly. They also make it fast to roll back to a previous version when needed: you simply stop the current container and run another one from the previous image.

- Docker enables application portability: since a Docker image is a single package launched by the Docker engine, it can be stored in a Docker registry and pulled anywhere, where it runs the same way on your local machine as on your production server.

- Docker shines for microservices architectures: lightweight, portable, and self-contained, Docker containers make it easier to implement the microservices pattern, decomposing traditional monolithic applications into separate services and containerizing each one for fast and easy deployment.
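The rollback mentioned in the delivery-cycle point above can also be written out as Docker CLI commands. This is a hypothetical Python helper that only builds the command list; the container name and the older image tag are placeholders, and it assumes the previous version was pushed to a registry earlier.

```python
from typing import List

# Hypothetical names: the container and the older image tag are placeholders.
CONTAINER = "myapp"
PREVIOUS = "registry.example.com/team/myapp:0.9"


def rollback_commands(container: str, previous_image: str) -> List[List[str]]:
    """Docker CLI calls that replace the running container with an older version."""
    return [
        ["docker", "pull", previous_image],                            # fetch the old image first
        ["docker", "stop", container],                                 # stop the current version
        ["docker", "rm", container],                                   # free the container name
        ["docker", "run", "-d", "--name", container, previous_image],  # start the old version
    ]


if __name__ == "__main__":
    for cmd in rollback_commands(CONTAINER, PREVIOUS):
        print(" ".join(cmd))
```

Pulling first means the old image is already on the server before the current container is stopped, which keeps the gap between `stop` and `run` short. The `rm` step is needed because a stopped container still holds its name.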

Also, Docker has made its name everywhere: according to a recent Indeed report, job postings listing Docker as a preferred skill increased by almost 50% over the previous year, which makes it a must-have skill to learn today.

If you still aren’t convinced, the images you build with Docker run on the major orchestration tools, such as Docker Swarm, OpenShift, and Kubernetes. To use these technologies, you need Docker in your pocket first. And to implement DevOps well in your project, you should master Docker too.

So now that you are keen to learn Docker, you can start here with a set of tips and tutorials provided by Docker itself.

I hope this short introduction has given you a clear picture of what Docker is. In the next articles, we will dive deeper into Docker and learn how to set it up and use it.