Virtualization and containerization have revolutionized the IT world. You have probably heard a lot about virtual machines, virtualization and containerization, as these terms have become very popular. In this article we will explain them briefly and take a quick look at their history, from the first prototype of virtualization to the modern technologies of containerization.

So, what is the story of virtualization?

Before diving into virtualization and containerization, let’s start with a simple definition. Virtualization is the technology that lets you create useful IT services using resources that are traditionally bound to hardware. It allows you to use a physical machine’s full capacity by distributing its capabilities among many users or environments. The idea first appeared in the 1960s. At that time computers were rare and expensive, and developers couldn’t get full access to them. While a user was entering data or checking an issue, the machine sat idle, which was unacceptable. To prevent this idle time, the idea emerged of letting more than one user work on the computer at once: while one user enters data, the computer works on the tasks of other users, filling the pauses and minimizing idle time. This idea was called the time-sharing concept.
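The time-sharing idea can be illustrated with a toy simulation. This is only a sketch and not how any historical system worked: the job format (alternating `cpu` and `wait` bursts measured in ticks) and both function names are assumptions made for illustration. It compares how long the CPU sits idle when users take the machine one at a time with how long it sits idle when it serves another user during each data-entry pause.

```python
from collections import deque


def sequential_idle_ticks(jobs):
    """Idle CPU ticks when each user has the machine to themselves, one after another.

    Every tick a user spends entering data is a tick the CPU does nothing.
    """
    return sum(ticks for job in jobs for kind, ticks in job if kind == "wait")


def time_shared_idle_ticks(jobs):
    """Idle CPU ticks when the CPU serves another ready user while one is entering data."""
    # Each user has a queue of mutable [kind, remaining_ticks] bursts.
    queues = [deque([kind, ticks] for kind, ticks in job) for job in jobs]
    idle = 0
    next_user = 0  # round-robin pointer
    while any(queues):
        # Pick the next user whose current burst needs the CPU, if any.
        chosen = None
        for offset in range(len(queues)):
            user = (next_user + offset) % len(queues)
            if queues[user] and queues[user][0][0] == "cpu":
                chosen = user
                next_user = (user + 1) % len(queues)
                break
        if chosen is None:
            idle += 1  # everyone is busy typing, so the CPU has nothing to do

        # Advance the clock by one tick: data entry progresses for every
        # waiting user in parallel, CPU work only for the chosen user.
        for user, queue in enumerate(queues):
            if queue and (queue[0][0] == "wait" or user == chosen):
                queue[0][1] -= 1
                if queue[0][1] <= 0:
                    queue.popleft()
    return idle


if __name__ == "__main__":
    # Two hypothetical users: compute for 2 ticks, type for 3 ticks, compute for 1 tick.
    jobs = [[("cpu", 2), ("wait", 3), ("cpu", 1)]] * 2
    print("one user at a time:", sequential_idle_ticks(jobs))
    print("time-shared:", time_shared_idle_ticks(jobs))
```

With these made-up jobs, the shared machine idles only while both users are typing at the same moment, whereas the one-at-a-time schedule idles through every data-entry pause.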

However, systems supporting this feature were far from perfect: they were slow, unreliable, and insecure. So, in 1968 IBM introduced a new mainframe that supported the CP/CMS (Control Program/Conversational Monitor System) system, developed at IBM’s Cambridge Scientific Center. It was the first OS supporting virtualization, and it was based on a virtual machine monitor, or hypervisor.

So, what is a virtual machine?

As its name suggests, a virtual machine (also known as a guest machine) is a “logical” computer, an emulation of a physical computer system that provides the same functionality as a real one. It runs on a physical computer (called the host machine), behaves like a physical computer, and uses its allocated resources to run its operating system and applications.

But why do we use virtual machines?

Since a virtual machine is just an emulation, multiple virtual machines can run on the same physical machine, each using its own virtual resources such as NVRAM and a virtual disk file.

A virtual machine is also isolated from the host machine and cannot tamper with it. This enhances security and keeps apps and data isolated while performing tasks that could be risky for the host machine, such as accessing infected data or modifying the operating system.

But this is not the end of the story!

Even though virtual machines solved many problems, there were some issues they couldn’t fix, summed up by the famous phrase “It works on my machine”: projects work on Dev, for example, and fail in production for reasons such as misconfigurations and version mismatches. So people turned to containerization for a solution.

So, the idea they came up with is to build something similar to a lightweight virtual machine, called a “container”, that contains only an application with its dependencies and the libraries it needs.

Containerization was a revolution and changed many definitions: a container isn’t really a virtual machine, but it can provide the same functionality with fewer resources and more advantages.

To find the roots of this revolution, let’s trace it back to the beginning: the dawn of the history of virtualization and containerization.

- In 1979: The idea of containerization first appeared with Unix V7, which introduced the chroot system call. By changing the root directory of a process and its children to a new location in the filesystem, chroot separated file access for each process, and it is considered the beginning of process isolation.

- Years later, in 2000: FreeBSD introduced a separation between services by partitioning a FreeBSD computer system into several independent, smaller systems called “jails”, with the ability to assign an IP address and a configuration to each one. The feature was called FreeBSD Jails.

- The next year, in 2001: Linux VServer introduced a jail mechanism that can partition resources (file systems, network addresses, memory) on a computer system. This virtualization was implemented by patching the Linux kernel. The last stable patch was released in 2006.

- Three years after that, in 2004: the first public beta of Solaris Containers was released, combining system resource controls with the boundary separation provided by zones.

- The following year, in 2005: OpenVZ was launched, an operating system-level virtualization technology for Linux that uses a patched Linux kernel for virtualization, isolation, resource management and checkpointing.

- Right after it, in 2006: Process Containers (launched by Google) was designed for limiting, accounting and isolating the resource usage (CPU, memory, disk I/O, network) of a collection of processes. It was renamed “Control Groups” (or cgroups) a year later and eventually merged into Linux kernel 2.6.24.
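Going back to the first entry in this timeline, the effect of chroot on file access can be modeled in a few lines. This is a simplified sketch, not the kernel’s implementation: the function name `resolve_in_jail` and the `/srv/jail` directory are hypothetical, symbolic links are ignored, and no real system call is made (the real call, available in Python as `os.chroot`, needs elevated privileges). It shows how every path a jailed process asks for ends up under the new root, even when the process tries to climb out with `..`.

```python
import posixpath


def resolve_in_jail(jail_root, requested_path):
    """Map a path as seen by the jailed process to the path on the host.

    Simplified model: symbolic links are not considered.
    """
    # Inside the jail, every path is interpreted relative to "/".
    # normpath drops any ".." that would climb above "/", which mirrors
    # the rule that the parent of the root directory is the root itself.
    inside = posixpath.normpath(posixpath.join("/", requested_path))
    # On the host, the jail's "/" is really jail_root.
    return posixpath.normpath(jail_root + inside)


if __name__ == "__main__":
    for path in ["/etc/passwd", "../../etc/passwd", "/"]:
        print(path, "->", resolve_in_jail("/srv/jail", path))
```

Both the absolute path and the `..` attempt resolve to the same file inside the jail directory, so the host’s own `/etc/passwd` stays out of reach in this model.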

And the race became more and more intense!

- Two years later, in 2008: LXC (or LinuX Containers) was created. It was the most complete implementation of a Linux container manager at the time. It was implemented using cgroups and Linux namespaces, and it works on a single Linux kernel without requiring any patches.

- In 2011: CloudFoundry started Warden, using LXC in the early stages and later replacing it with its own implementation. Warden can isolate environments on any operating system, running as a daemon and providing an API for container management. It developed a client-server model to manage a collection of containers across multiple hosts. Warden includes a service to manage cgroups, namespaces and the process life cycle.

- In 2013: LMCTFY (or Let Me Contain That For You) showed up as an open-source version of Google’s container stack, providing Linux application containers. Applications can be made “container aware”, creating and managing their own subcontainers. Active development of LMCTFY stopped in 2015 after Google started contributing core LMCTFY concepts to libcontainer, which is now part of the Open Container Initiative.

- In the same year: Docker entered the field and containers exploded in popularity. It’s no coincidence that the growth of Docker and the growth of container use go hand in hand, and today Docker is the leader in containerization.

Just as Warden did, Docker also used LXC in its initial stages and later replaced that container manager with its own library, libcontainer. But there’s no doubt that Docker separated itself from the pack by offering an entire ecosystem for container management.

We’ve taken a little trip through the history of virtualization and the invention of containers, and I’m sure you’re thirsty to know more. So, let’s dig deeper and take a look at the details.

So, for a better understanding of containers, we will list the differences between them and virtual machines:

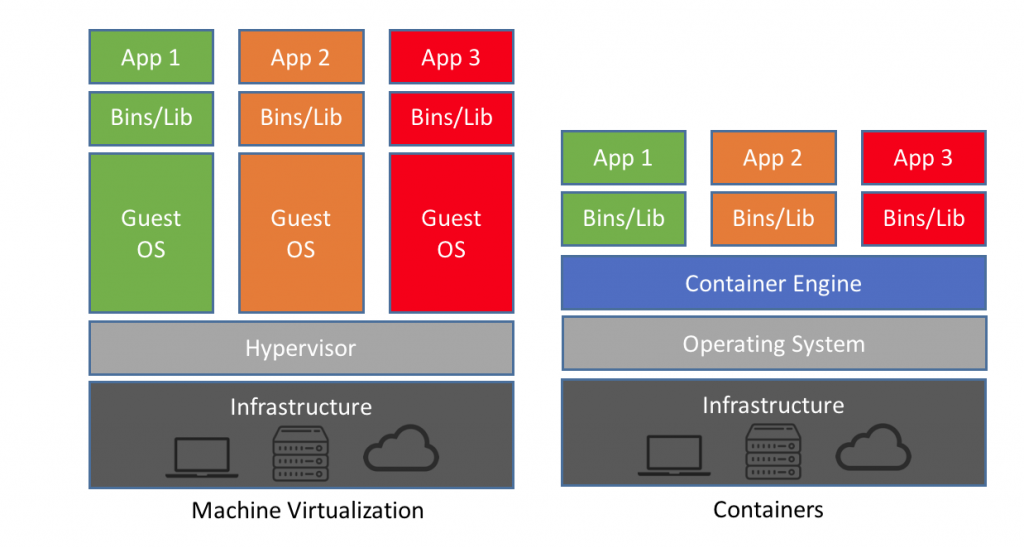

A virtual machine runs on top of a hypervisor (also called a virtual machine monitor), which allocates its resources. Containers, however, are managed through what is called a “container engine”. One of the most famous engines is Docker; we will talk a lot about it in the next articles.

In addition, a virtual machine runs its own full operating system, while a container relies on an underlying operating system that provides the basic services to all of the containerized applications.

So, containers aren’t just about isolation and reducing resource use; they also offer numerous benefits. According to a recent study by 451 Research, the adoption of containerization is expected to grow 40% annually through 2020, because containers are light, secure and portable. You can copy containers through snapshots called “images” that can be modified, saved in “registries” and reused. You can check Docker Hub, where you can find public Docker images of almost any application you can imagine, such as MySQL, Nginx and even Docker itself. There you can find official releases as well as customized images built by the Docker community.

And still this is not the end!

As the containerization industry grew, new features kept being added. You can manage inter-container networks to ensure connectivity between containers and isolate them from other networks. You can also manage volumes and mount them if you need to persist your data outside of containers, which is really important for databases to prevent data loss when a container shuts down. You can even create a cluster of containers that are fully connected and depend on each other. Nowadays you can find projects running thousands of containers in a complex architecture. To manage this complexity, “orchestrators” were invented, which made virtualization and containerization even better. They take container images and configuration files as input and do the whole job for you. The best-known orchestrator for now is Kubernetes (also called k8s), which was originally developed by Google and is used by it along with a long list of well-known companies such as GitLab (you can check the list from here). We will also talk more about Kubernetes in coming articles.
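To make the orchestrator idea a little more concrete, here is a minimal sketch of the kind of comparison such a tool performs: it looks at the desired state (how many containers of each image should run) and the current state, and works out what to start or stop. This is not the Kubernetes API or any real orchestrator’s code; the function name `reconcile`, the dictionary format and the action tuples are all assumptions made for illustration.

```python
def reconcile(desired, running):
    """Return the actions needed to make `running` match `desired`.

    Both arguments map an image name to a number of containers.
    The result is a list of hypothetical (action, image, count) tuples.
    """
    actions = []
    # Look at every image mentioned on either side, in a stable order.
    for image in sorted(set(desired) | set(running)):
        want = desired.get(image, 0)
        have = running.get(image, 0)
        if want > have:
            actions.append(("start", image, want - have))
        elif want < have:
            actions.append(("stop", image, have - want))
    return actions


if __name__ == "__main__":
    # Hypothetical configuration: what we want versus what is running now.
    desired = {"nginx": 3, "mysql": 1}
    running = {"nginx": 1, "redis": 2}
    for action in reconcile(desired, running):
        print(action)
```

A real orchestrator repeats a comparison like this continuously and also handles scheduling across hosts, networking, volumes and failures, which this sketch leaves out entirely.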

So, we took a little trip through the history of virtualization and containerization, from the basic virtual machine to modern containers. We also covered the numerous advantages of containerization and explained why it’s a must today for our applications.

In the next articles we will learn how to build images, run containers and also orchestrate them.